The theory of computation studies the fundamental principles that govern the capabilities and limitations of computers. This guide surveys the field's main branches (automata theory, formal languages, computability, and complexity) and the theoretical ideas that underpin modern computing.

From Turing machines to formal languages, it covers the core models of computation and their implications for real-world applications and for research in computer science.

1. Theoretical Foundations

The theory of computation provides a mathematical framework for understanding the fundamental concepts of computation. It investigates the capabilities and limitations of abstract computing devices, such as Turing machines and finite automata, and explores the role of mathematical logic in defining and analyzing computational processes.

These concepts form the basis for understanding the nature of computation and its applications in various fields.

Mathematical logic plays a crucial role in the theory of computation. It provides a formal language for describing computational processes and reasoning about their properties. Concepts such as propositional and predicate logic, set theory, and automata theory are essential for understanding the foundations of computation.

The theory of computation has wide-ranging applications in computer science and beyond. It provides the theoretical underpinnings for areas such as algorithm design, programming language design, compiler construction, and artificial intelligence. By understanding the fundamental principles of computation, we can better understand the capabilities and limitations of computing devices and develop more efficient and reliable algorithms and systems.

2. Automata Theory

Automata theory is a branch of the theory of computation that deals with the study of abstract machines known as automata. Automata are mathematical models that can be used to represent and analyze computational processes. Different types of automata have varying capabilities and limitations, and they are used to model different aspects of computation.

- Finite automata are the simplest type of automata. They recognize regular languages, which are the sets of strings that can be described by a regular expression.

- Pushdown automata are more powerful than finite automata because they add a stack. They recognize context-free languages, which are the sets of strings that can be generated by a context-free grammar.

- Turing machines are the most powerful of the three. They can recognize any language generated by an unrestricted grammar (the recursively enumerable languages), and they are used to define the concept of computability.
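The difference between the first two models can be sketched in a few lines of Python. This is an illustrative sketch, not a standard library API: the state names, the transition table, and both function names are hypothetical. The finite automaton remembers only its current state, while the pushdown recognizer also has a stack, which is what lets it match counts.

```python
# Hypothetical DFA: accepts binary strings that end in "01" (a regular language).
DFA_TRANSITIONS = {
    ("q0", "0"): "q1",
    ("q0", "1"): "q0",
    ("q1", "0"): "q1",
    ("q1", "1"): "q2",
    ("q2", "0"): "q1",
    ("q2", "1"): "q0",
}
DFA_START = "q0"
DFA_ACCEPTING = {"q2"}


def dfa_accepts(word: str) -> bool:
    """Run the DFA over `word`; the only memory is the current state."""
    state = DFA_START
    for symbol in word:
        key = (state, symbol)
        if key not in DFA_TRANSITIONS:
            return False  # symbol outside the alphabet {0, 1}
        state = DFA_TRANSITIONS[key]
    return state in DFA_ACCEPTING


def pda_accepts_anbn(word: str) -> bool:
    """Recognize { a^n b^n : n >= 0 } with a stack, as a pushdown automaton would."""
    stack = []
    seen_b = False
    for symbol in word:
        if symbol == "a":
            if seen_b:
                return False  # an 'a' after a 'b' is never allowed
            stack.append("A")
        elif symbol == "b":
            seen_b = True
            if not stack:
                return False  # more b's than a's
            stack.pop()
        else:
            return False  # symbol outside the alphabet {a, b}
    return not stack  # accept only if every 'a' was matched


print(dfa_accepts("1101"), dfa_accepts("10"))
print(pda_accepts_anbn("aabb"), pda_accepts_anbn("aab"))
```

The language of equal numbers of a's followed by b's is a standard example of a context-free language that no finite automaton can recognize, because a fixed number of states cannot count arbitrarily high.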

Automata theory has applications in various areas of computer science, including language recognition, compiler design, and artificial intelligence. By understanding the different types of automata and their capabilities, we can better understand the nature of computation and develop more efficient algorithms and systems.

3. Formal Languages

A formal language is a set of strings over a finite alphabet, usually described by a formal grammar. Formal languages are used to model the syntax of programming languages, natural languages, and other types of data. They are classified into different types based on the generative power of the grammars that describe them, with the Chomsky hierarchy being the most widely used classification system.

The Chomsky hierarchy consists of four levels:

- Type 0: Unrestricted grammars

- Type 1: Context-sensitive grammars

- Type 2: Context-free grammars

- Type 3: Regular grammars

Each level of the hierarchy is more restrictive than the one before it, so Type 0 grammars are the most powerful and Type 3 grammars are the least powerful. Formal languages are used in various areas of computer science, including compiler design, natural language processing, and artificial intelligence.
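To make the idea of generative power concrete, the sketch below enumerates the short strings produced by two small grammars, one Type 2 and one Type 3. Both grammars and the `generate` helper are hypothetical examples chosen for illustration. The helper assumes single-character symbols and relies on a simple length bound to stop, which is enough for these two grammars but not for arbitrary ones.

```python
from collections import deque

# Hypothetical Type 2 (context-free) grammar: S -> a S b | (empty string).
# Dictionary keys are nonterminals; every other character is a terminal.
CFG_RULES = {"S": ["aSb", ""]}

# Hypothetical Type 3 (regular) grammar: S -> a S | b.
REGULAR_RULES = {"S": ["aS", "b"]}


def generate(rules: dict, start: str, max_len: int) -> list:
    """Enumerate all terminal strings of length <= max_len derivable from `start`."""
    results = set()
    seen = {start}
    queue = deque([start])
    while queue:
        form = queue.popleft()
        # Find the leftmost nonterminal, if any.
        index = next((i for i, ch in enumerate(form) if ch in rules), None)
        if index is None:
            results.add(form)  # no nonterminals left: a finished string
            continue
        for body in rules[form[index]]:
            new_form = form[:index] + body + form[index + 1:]
            # Prune forms whose terminals already exceed the length bound.
            terminals = sum(1 for ch in new_form if ch not in rules)
            if terminals <= max_len and new_form not in seen:
                seen.add(new_form)
                queue.append(new_form)
    return sorted(results, key=lambda s: (len(s), s))


print(generate(CFG_RULES, "S", 4))      # strings of the form a^n b^n
print(generate(REGULAR_RULES, "S", 3))  # strings of the form a^n b
```

The regular grammar only ever extends the string on one side, while the context-free rule `S -> a S b` grows both sides at once, which is what allows it to keep the two halves balanced.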

By understanding the different types of formal languages and their properties, we can better understand the structure and meaning of data.

4. Computability Theory

Computability theory is a branch of the theory of computation that deals with the study of computable functions. A computable function is a function that can be computed by a Turing machine. The halting problem is a famous problem in computability theory that asks whether there is a Turing machine that can determine whether any given Turing machine will halt on a given input.
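A Turing machine is simple enough to simulate directly. The following is a minimal sketch under stated assumptions: the rule table, the state names, and the `run_turing_machine` function are hypothetical, and the `max_steps` budget is a practical safeguard added here, not part of the formal model. The example machine computes the function "add 1 to a binary number".

```python
BLANK = "_"

# Hypothetical machine: add 1 to a binary number written on the tape.
# Each entry maps (state, symbol read) -> (symbol to write, head move, next state).
INCREMENT = {
    ("right", "0"): ("0", +1, "right"),    # scan right to the end of the input
    ("right", "1"): ("1", +1, "right"),
    ("right", BLANK): (BLANK, -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),    # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", 0, "halt"),      # 0 + carry = 1, done
    ("carry", BLANK): ("1", 0, "halt"),    # carry past the leftmost digit
}


def run_turing_machine(rules, tape_input, start="right", halt="halt", max_steps=10_000):
    """Simulate a single-tape Turing machine and return the final tape contents."""
    tape = dict(enumerate(tape_input))  # sparse tape: position -> symbol
    state, head, steps = start, 0, 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("step budget exhausted before the machine halted")
        symbol = tape.get(head, BLANK)
        write, move, state = rules[(state, symbol)]
        tape[head] = write
        head += move
        steps += 1
    cells = [tape.get(i, BLANK) for i in range(min(tape), max(tape) + 1)]
    return "".join(cells).strip(BLANK)


print(run_turing_machine(INCREMENT, "1011"))  # 11 + 1 = 12 in binary
```

The simulator has to impose a step budget because it cannot know in advance whether an arbitrary rule table will ever reach the halting state.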

The halting problem is undecidable, meaning that there is no Turing machine that can solve it.
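The standard argument for undecidability is a diagonal construction, which can be sketched in Python. The names `make_paradox` and `refute` are hypothetical, and the sketch assumes that any claimed decider `halts(program, argument)` is deterministic and always returns a boolean. Given such a decider, one builds a program that does the opposite of whatever the decider predicts about it.

```python
def make_paradox(halts):
    """Build the self-defeating program from any claimed halting decider."""
    def paradox(program):
        if halts(program, program):
            while True:  # decider said "halts", so loop forever
                pass
        return "halted"  # decider said "loops", so halt immediately
    return paradox


def refute(halts):
    """Show that `halts` answers wrongly about paradox applied to itself."""
    paradox = make_paradox(halts)
    prediction = halts(paradox, paradox)
    if prediction:
        # Predicted "halts", but by construction paradox(paradox) would loop
        # forever, so we must not actually run it here.
        return "decider said 'halts', but paradox(paradox) loops"
    # Predicted "loops", so running it is safe: it returns at once.
    assert paradox(paradox) == "halted"
    return "decider said 'loops', but paradox(paradox) halts"


# Two trivially wrong candidate deciders, for illustration only.
print(refute(lambda program, argument: True))
print(refute(lambda program, argument: False))
```

Whatever the candidate decider answers about `paradox` run on itself, the answer is wrong, so no correct decider can exist.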

The Church-Turing thesis states that any function that can be computed by an effective procedure (an algorithm) can be computed by a Turing machine. It is supported by the proven fact that Turing machines and the lambda calculus compute exactly the same class of functions. The thesis has important implications for the theory of computation, as it suggests that all reasonable models of computation have the same computational power.
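The lambda calculus can feel abstract, so here is a small sketch of how it computes using nothing but functions. It uses Church numerals, a standard encoding in which the number n is the function that applies another function n times. The helper names `to_int` and `from_int` are hypothetical conveniences for moving between Python integers and the encoding.

```python
# Church numerals: the number n is the function that applies f to x n times.
ZERO = lambda f: lambda x: x
SUCC = lambda n: lambda f: lambda x: f(n(f)(x))
ADD = lambda m: lambda n: lambda f: lambda x: m(f)(n(f)(x))
MUL = lambda m: lambda n: lambda f: m(n(f))


def to_int(numeral) -> int:
    """Decode a Church numeral by counting how many times it applies f."""
    return numeral(lambda k: k + 1)(0)


def from_int(value: int):
    """Encode a non-negative Python int as a Church numeral."""
    numeral = ZERO
    for _ in range(value):
        numeral = SUCC(numeral)
    return numeral


two, three = from_int(2), from_int(3)
print(to_int(ADD(two)(three)))  # addition built only from function application
print(to_int(MUL(two)(three)))  # multiplication as function composition
```

Arithmetic here is carried out purely by applying and composing functions, with no tape, states, or built-in numbers inside the encoding itself.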

Computability theory has applications in various areas of computer science, including algorithm design, programming language design, and artificial intelligence. By understanding the concept of computability and the limitations of computation, we can better understand the nature of computation and develop more efficient algorithms and systems.

5. Complexity Theory

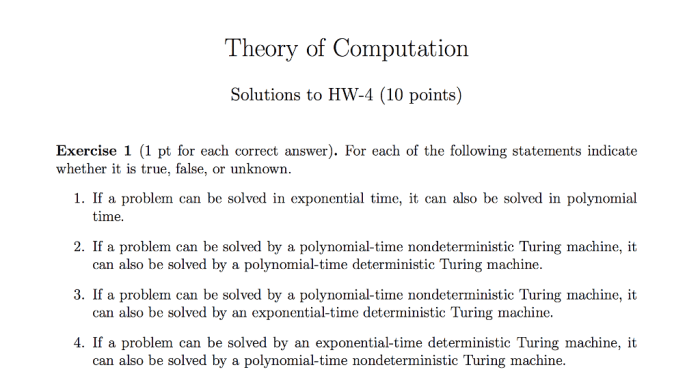

Complexity theory is a branch of the theory of computation that deals with the study of the computational complexity of problems. Computational complexity is a measure of how difficult it is to solve a problem on a given computational model.

Complexity theory classifies problems into different complexity classes based on their computational complexity.

One of the most important complexity classes is P, which consists of the decision problems that can be solved in polynomial time by a deterministic Turing machine. Polynomial time means that the running time of the algorithm that solves the problem is bounded by a polynomial function of the input size.

Another important complexity class is NP, which consists of the decision problems for which a proposed solution can be verified in polynomial time. Verifying a solution means checking whether it is correct, which can be far easier than finding it.
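Polynomial-time verification can be illustrated with subset sum, a well-known problem in NP: given a list of integers and a target, is there a subset that adds up to the target? The sketch below checks a proposed solution. The function name and the choice to represent the certificate as a list of indices are assumptions made for this example.

```python
def verify_subset_sum(numbers, target, certificate):
    """Check a proposed solution; `certificate` is a list of indices into `numbers`."""
    if len(set(certificate)) != len(certificate):
        return False  # each element may be used at most once
    if any(not 0 <= i < len(numbers) for i in certificate):
        return False  # index out of range
    return sum(numbers[i] for i in certificate) == target


numbers = [3, 34, 4, 12, 5, 2]
print(verify_subset_sum(numbers, 9, [2, 4]))  # 4 + 5 = 9
print(verify_subset_sum(numbers, 9, [0, 1]))  # 3 + 34 = 37
```

The check makes a single pass over the certificate, so its running time grows polynomially with the size of the input, regardless of how hard the solution was to find.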

The P versus NP problem is one of the most important unsolved problems in computer science. It asks whether every problem in NP is also in P, that is, whether every problem whose solutions can be verified in polynomial time can also be solved in polynomial time. If P = NP, then there would be a polynomial-time algorithm for every such problem.

This would have significant implications for computer science, as it would mean that many problems that are currently considered intractable could be solved efficiently.
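To see what intractable means in practice, consider subset sum again: given a list of integers and a target, find a subset that adds up to the target. The problem is NP-complete, and no algorithm is known that solves it in time polynomial in the input size. The sketch below is the obvious exhaustive search, with a hypothetical function name. It is correct, but it may examine up to 2^n subsets for n numbers.

```python
from itertools import combinations


def solve_subset_sum(numbers, target):
    """Exhaustive search: try every subset of indices, up to 2**n candidates."""
    indices = range(len(numbers))
    for size in range(len(numbers) + 1):
        for subset in combinations(indices, size):
            if sum(numbers[i] for i in subset) == target:
                return list(subset)  # indices of one subset that hits the target
    return None  # no subset adds up to the target


print(solve_subset_sum([3, 34, 4, 12, 5, 2], 9))
print(solve_subset_sum([1, 2, 3], 100))
```

Each additional number doubles the count of subsets, so this approach quickly becomes impractical even though checking any single candidate is cheap.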

Complexity theory has applications in various areas of computer science, including algorithm design, cryptography, and artificial intelligence. By understanding the computational complexity of problems, we can better understand the limits of computation and develop more efficient algorithms and systems.

FAQ

What is the significance of the halting problem in computability theory?

The halting problem demonstrates a fundamental limitation of computation: no algorithm can determine, for every program and input, whether the program will halt or run indefinitely.

How does automata theory contribute to natural language processing?

Automata theory provides a formal framework for understanding the structure and recognition of languages, which is essential for developing natural language processing systems that can effectively handle complex linguistic structures.

What is the practical relevance of complexity theory?

Complexity theory provides insights into the computational resources required to solve different types of problems, guiding the design of efficient algorithms and helping to determine the feasibility of solving complex problems within practical time constraints.